According to Memes Consulting, the growing competition between LiDAR and other sensor technologies—such as cameras, radar, and ultrasound—is driving the need for more advanced sensor fusion. This, in turn, demands careful selection of photodetectors, light sources, and MEMS micromirrors to ensure optimal performance in autonomous systems.

As sensor technology, imaging capabilities, radar, LiDAR, electronics, and artificial intelligence continue to evolve, a wide range of advanced driver assistance system (ADAS) functions have become available. These include collision avoidance, blind spot monitoring, lane departure warnings, and parking assistance. These systems rely on sensor fusion to synchronize their operations, enabling fully automated or unmanned vehicles to perceive their surroundings, alert drivers to potential hazards, and even take evasive actions without human input.

For self-driving cars, the ability to detect and identify objects at high speeds is essential. They must quickly construct 3D maps of the road environment up to 100 meters ahead and generate high-resolution images at distances of up to 250 meters. In the absence of a driver, the vehicle’s AI must make quick and accurate decisions based on real-time data.

One fundamental technique used in this process is Time-of-Flight (ToF), which measures the time it takes for a pulse of energy to travel from the vehicle to a target and back. Once the speed of the pulse through the air is known, the distance to the object can be calculated. This pulse can be ultrasonic (sonar), radio (radar), or light-based (LiDAR).
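As a minimal sketch of the time-of-flight idea described above, the snippet below converts a round-trip time into a one-way distance. The function name `tof_distance` and the speed-of-sound figure (about 343 m/s, assuming dry air at roughly 20 °C) are illustrative assumptions, not values from the article.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum (radar, LiDAR)
SPEED_OF_SOUND_M_S = 343.0          # assumed: dry air at about 20 degrees C (sonar)


def tof_distance(round_trip_s: float, speed_m_s: float) -> float:
    """Return the one-way distance for a pulse that travels out and back."""
    if round_trip_s < 0 or speed_m_s <= 0:
        raise ValueError("round-trip time must be >= 0 and speed must be > 0")
    # The pulse covers the distance twice, so halve the total path length.
    return speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    # A light pulse returning after 667 ns corresponds to roughly 100 m.
    print(f"LiDAR/radar: {tof_distance(667e-9, SPEED_OF_LIGHT_M_S):.2f} m")
    # A sound pulse needs milliseconds to cover just a couple of meters.
    print(f"Sonar:       {tof_distance(11.66e-3, SPEED_OF_SOUND_M_S):.2f} m")
```

The same formula serves all three pulse types; only the propagation speed changes, which is why light-based and radio-based systems need far finer timing than ultrasonic ones.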

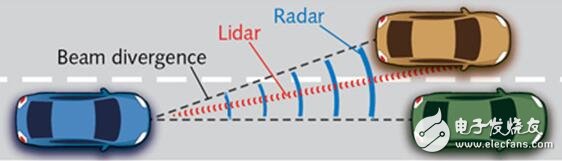

Among these ToF technologies, LiDAR offers the highest angular resolution, making it the preferred choice for many applications. Unlike radar, which has larger beam divergence and lower resolution, LiDAR produces sharper images that allow better discrimination between closely spaced objects. This level of detail is especially crucial in high-speed environments where quick reaction times are necessary to avoid collisions.

When it comes to LiDAR, selecting the right laser source is critical. In a ToF LiDAR system, a laser emits a light pulse of duration τ, and the internal timing circuit starts its clock at the moment of transmission. When the reflected pulse reaches the photodetector, the resulting electrical signal stops the clock, allowing the system to measure the round-trip time Δt and calculate the distance R to the object.

If the laser and photodetector are located at practically the same position, the distance is R = cΔt / (2n), where c is the speed of light in a vacuum and n is the refractive index of the medium through which the light travels (close to 1 in air). The factor of 2 accounts for the round trip.

Two factors limit the distance resolution ΔR: the uncertainty δΔt in measuring Δt (the time jitter) and, when the laser spot is larger than the target feature to be resolved, the spatial width w of the pulse (w = cτ). The first gives ΔR = ½ c δΔt and the second gives ΔR = ½ w = ½ c τ. To achieve a resolution of 5 cm, each relation separately requires roughly 300 picoseconds: δΔt ≈ 300 ps and τ ≈ 300 ps. ToF LiDAR systems therefore require photodetectors with low time jitter and lasers capable of emitting very short pulses, such as picosecond lasers.
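The arithmetic behind the 5 cm and 300 ps figures can be checked with a short sketch. It assumes the standard round-trip relations R = cΔt / (2n) and ΔR = ½ c δΔt (and likewise ΔR = ½ c τ), with n taken as 1 for air; the function names are hypothetical and chosen only for illustration.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # c, speed of light in vacuum


def lidar_range_m(delta_t_s: float, n: float = 1.0) -> float:
    """Distance R = c * delta_t / (2 * n) for a co-located laser and detector."""
    return SPEED_OF_LIGHT_M_S * delta_t_s / (2.0 * n)


def resolution_m(timing_s: float) -> float:
    """Distance resolution for a timing term (jitter or pulse duration), n ~ 1."""
    return 0.5 * SPEED_OF_LIGHT_M_S * timing_s


def timing_budget_s(target_resolution_m: float) -> float:
    """Largest jitter or pulse duration that still meets the target resolution."""
    return 2.0 * target_resolution_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    budget = timing_budget_s(0.05)  # 5 cm target from the text
    print(f"Timing budget for 5 cm: {budget * 1e12:.0f} ps")
    print(f"Resolution at 300 ps:   {resolution_m(300e-12) * 100:.1f} cm")
```

The exact budget for 5 cm comes out slightly above 300 ps, which is consistent with the approximate figure quoted in the text.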

Figure 1 illustrates how the beam divergence angle depends on the ratio of the wavelength to the aperture size of the transmitting antenna (for radar) or lens (for LiDAR). This ratio is much larger for radar, which results in a wider beam and lower angular resolution. As shown, radar (black) struggles to distinguish between two cars, while LiDAR (red) provides clear separation.
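To give a feel for the scale of this effect, the sketch below uses the order-of-magnitude diffraction estimate θ ≈ λ / D. All numeric inputs are assumptions for illustration, not values from the article: a 77 GHz radar carrier, a 10 cm radar antenna, a 905 nm laser, and a 25 mm lens. Real LiDAR beams are usually wider than this idealized limit, so the output is a rough comparison rather than a performance prediction.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def divergence_rad(wavelength_m: float, aperture_m: float) -> float:
    """Idealized diffraction estimate: divergence ~ wavelength / aperture."""
    if wavelength_m <= 0 or aperture_m <= 0:
        raise ValueError("wavelength and aperture must be positive")
    return wavelength_m / aperture_m


def beam_width_m(range_m: float, theta_rad: float) -> float:
    """Small-angle beam footprint at a given range (initial beam size ignored)."""
    return range_m * theta_rad


# Assumed example values (hypothetical, for illustration only).
RADAR_WAVELENGTH_M = SPEED_OF_LIGHT_M_S / 77e9  # about 3.9 mm at 77 GHz
LIDAR_WAVELENGTH_M = 905e-9
RADAR_APERTURE_M = 0.10
LIDAR_APERTURE_M = 0.025

radar_theta = divergence_rad(RADAR_WAVELENGTH_M, RADAR_APERTURE_M)
lidar_theta = divergence_rad(LIDAR_WAVELENGTH_M, LIDAR_APERTURE_M)

if __name__ == "__main__":
    for name, theta in (("radar", radar_theta), ("LiDAR", lidar_theta)):
        width = beam_width_m(100.0, theta)
        print(f"{name}: {theta * 1e3:.3f} mrad, {width:.3f} m wide at 100 m")
```

Under these assumptions the radar beam at 100 m is several meters wide, wider than a car, while the idealized LiDAR beam is a few millimeters wide, which matches the qualitative picture in Figure 1.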

For automotive LiDAR designers, choosing the appropriate wavelength is one of the most critical decisions. Several factors influence this choice, including human eye safety, atmospheric interaction, available laser options, and compatible photodetectors.

The two most commonly used wavelengths are 905 nm and 1550 nm. While 905 nm allows for the use of silicon-based photodetectors, which are cost-effective, 1550 nm is safer for the human eye and allows for higher energy per pulse, which is beneficial for the overall photon budget.

Atmospheric conditions, such as rain or fog, affect the performance of LiDAR systems by causing scattering and absorption of light. These effects vary with wavelength, making the choice of wavelength even more complex in real-world environments. Because water absorbs 1550 nm light more strongly than 905 nm light, 905 nm generally suffers less loss in wet conditions such as rain and fog.
