Big data and Hadoop: Understanding the Relationship

Is big data equal to Hadoop? Definitely not. However, many people immediately associate Hadoop with big data. In reality, data scientists use massive datasets to build models that benefit enterprises in ways previously unimaginable. But have we fully tapped into the potential of this data? Does it meet people’s expectations? Today, I’ll start by exploring Hadoop from the Hadoop project itself. As big data technology enters the era of supercomputing, it can be quickly applied to ordinary businesses. As it spreads widely, it will change the way many industries operate. However, there are still many misunderstandings about big data. Let's take a closer look at the relationship between big data and Hadoop.

What is Hadoop?

Hadoop is a distributed system framework designed for processing large volumes of data. It can be seen as a tool used for analyzing big data, often working alongside other components to collect, store, and compute large datasets. Let’s take a real-world Hadoop teaching project as an example for a more detailed analysis.

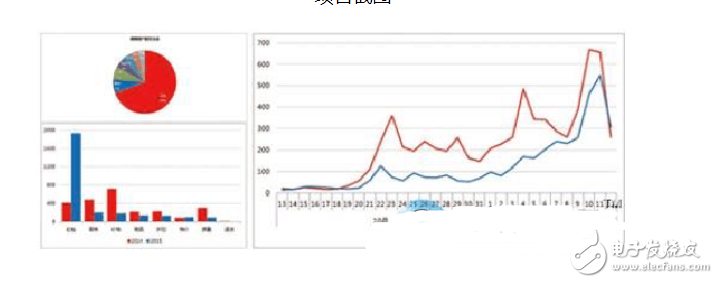

Project Description: This project combines Hadoop, Storm, and Spark to simulate Double 11 sales. It processes order details to calculate total sales, regional sales rankings, and later performs SQL analysis, data analysis, and data mining.

Stage 1 (Storm Real-Time Reporting):

- User orders are sent to the Kafka queue.

- Storm calculates real-time total sales and sales per province.

- Results are stored in HBase.

Stage 2 (Offline Reporting):

- Orders are stored in Oracle.

- Data is imported into Hadoop via Sqoop.

- MR and RDD are used for ETL cleaning.

- Hive and SparkSQL tables are created for future analysis.

- HQL is used to analyze business metrics, and results are saved in MySQL.

Stage 3 (Large-Scale Ad-Hoc Queries):

- Orders are stored in Oracle.

- Data is imported into Hadoop via Sqoop.

- MR is used to load data into HBase.

- HBase Java API enables ad-hoc queries.

- Solr integrates with HBase for multi-dimensional conditional queries.

Stage 4 (Data Mining and Graph Calculations):

- Orders are stored in Oracle.

- Data is imported into Hadoop via Sqoop.

- MR and RDD are used for ETL.

In general, Hadoop is suitable for big data storage and analysis, running on clusters with thousands to tens of thousands of servers. It supports PB-level storage. Typical applications include search, log processing, recommendation systems, data analysis, video and image analysis, and more.

About Big Data and Hadoop

Hadoop is just a big data processing framework. The learning curve is relatively low, requiring basic knowledge of Java, Linux, JVM, synchronization, and communication. Once you understand these, learning Hadoop becomes much easier.

Hadoop is actually a distributed file system. Data is spread across multiple servers. When data needs processing, all servers work together to aggregate intermediate results into a final output. This requires specific algorithms, unlike traditional methods that use the MapReduce framework. Taobao, for example, uses Hadoop. Think about the $11 billion transaction volume from last year—this is the kind of data that Hadoop handles.

Data mining covers a vast area and is a very hot field. However, it also presents significant challenges. It is closely related to machine learning and artificial intelligence. To learn it, you need not only a mathematical foundation but also patience and perseverance.

Three Common Misconceptions About Big Data

There are many misconceptions about what big data is and what it can do. Here are three common ones:

1. Relational databases cannot scale significantly, so they are not considered big data technology (wrong).

2. Hadoop or any MapReduce is always the best choice for big data, regardless of workload or use case (wrong).

3. The era of graphical management systems is over, and visual development will become a barrier to big data (incorrect).

Big Data Enters the Historical Stage

Big data has emerged for enterprises, partly due to reduced computing energy consumption and the ability to perform multiple processes. As memory costs continue to drop, companies can now store more data in memory than before. Connecting multiple computers to a server cluster is also easier now. These changes mark the arrival of big data, according to Carl Olofson, an IDC database management analyst.

"We not only have to do these things well, but we can afford the cost," he said. "In the past, some supercomputers had the ability to process multiple tasks, but they used specialized hardware, costing hundreds of thousands of dollars. Now, the same configuration can be achieved with standard hardware, making it faster, more efficient, and capable of handling larger volumes of data."

IDC believes that for big data to gain widespread recognition, the technology must be affordable and meet IBM’s 3V criteria: variety, volume, and velocity. Variety refers to both structured and unstructured data. Volume means the amount of data processed can be extremely large.

Olofson explained: “It depends on the situation. Sometimes a few hundred GB may be a lot. If I can analyze 300GB of data that took an hour in just one second, that’s a huge difference. Big data is a technology that meets at least two of these three requirements and is accessible to ordinary enterprises.â€

12V Battery,Lead Acid Battery,Lead Acid Deep Cycle Battery,Sealed Rechargeable Battery

JIANGSU BEST ENERGY CO.,LTD , https://www.bestenergy-group.com